I often find myself caught between the world of military language and the completely different language used in the information technology domain. It did not take long before I understood that mapping or translating terms was the only way around it, and that I can often act as a bridge in discussions: when one side says something, it needs to be translated or explained to make sense in the other domain.

As an intelligence officer, I naturally find the term Business Intelligence extremely problematic. The CIA has a good article that dives into the importance of defining intelligence, but also into some of the problems involved. In short, I think the definition used in the Department of Defense (DoD) Dictionary of Military and Associated Terms illustrates the core components:

The product resulting from the collection, processing, integration, evaluation, analysis, and interpretation of available information concerning foreign nations, hostile or potentially hostile forces or elements, or areas of actual or potential operations. The term is also applied to the activity which results in the product and to the organizations engaged in such activity (p.234).

The important thing is that, in order to be intelligence (in my area of work), information must both have gone through some sort of processing and analysis AND cover only things foreign, that is, information of a certain category.

When I first encountered the term business intelligence at the University of Lund in southern Sweden, it referred to activities carried out in a commercial corporation to analyse the market and its competitors. It almost sounded like a way to take the methods and procedures of military intelligence and simply apply them in a corporate environment. Still, it was not at all focused on structured data gathering and statistics/data mining.

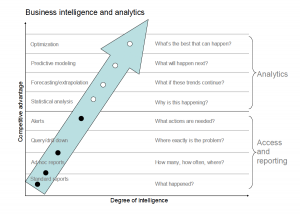

So when speaking about Business Intelligence (BI) in a military or governmental context, the term can often cause some confusion. From an IT perspective it involves a set of technical products doing Extract-Transform-Load (ETL) and Data Warehousing, as well as the front-end products analysts use to query and visualise the data. Seen in the light of the definition of intelligence above, this raises a first, more philosophical, issue. As long as the main output is gathered data visualised directly to the end user through Enterprise Reporting or Dashboards, it ends up in a grey area whether I would consider it processed at all. In that use case, Business Intelligence sometimes claims to be more (in terms of analytical ambition) than a person with an intelligence background would expect.

OK, so just displaying some data is not the same thing as doing in-depth analysis of it, using statistical and data mining techniques to find patterns, correlations and trends. One of the major players in the market, SAS Institute, has recognised exactly that and has tried to market its offering as something more than “just” Business Intelligence by renaming it Business Analytics. The idea is to achieve “proactive, predictive, and fact-based decision-making”, where I believe the important word is predictive. In other words, Business Analytics claims not just to visualise historic data but also to make predictions about the future.

An article from BeyeNETWORK also highlights the problematic nature of the term business intelligence, because it is so often tied to data warehousing technology and, more importantly, because only part of an organisation’s information is structured data stored in a data warehouse. Coming from the ECM domain I completely agree, and it says something about the problem of assuming both that BI covers all the data we need to work with and that BI is all we need to support decision-makers. The article also discusses what analysis and analytics really mean. Wikipedia says this about data analysis:

Analysis of data is a process of inspecting, cleaning, transforming, and modeling data with the goal of highlighting useful information, suggesting conclusions, and supporting decision making.

The question, then, is what the difference is between analysis and analytics. The word business also appears in these terms, because a common application of business intelligence is measuring performance across an organisation through processes that are automated and therefore, to a larger degree, measurable. The BeyeNETWORK article suggests the following definition of business analysis and of its results, analytics:

“Business analysis is the process of analyzing trusted data with the goal of highlighting useful information, supporting decision making, suggesting solutions to business problems, and improving business processes. A business intelligence environment helps organizations and business users move from manual to automated business analysis. Important results from business analysis include historical, current and predictive metrics, and indicators of business performance. These results are often called analytics.”

Looking at the suite of products covered under the BI umbrella, that approach downplays the fact that these tools and methods have applications beyond process optimization. In law enforcement, intelligence, pharmaceuticals and other fields there is huge potential to use these technologies not only to understand and optimize internal processes but, more importantly, to understand the world around them: seeing patterns and trends in crime rates over time and geography, using data mining and statistics to improve understanding of a conflict area, or understanding the results of years of scientific experiments. Sure, there are toolsets marketed more in terms of statistics for use in economics and political science, but those applications could really make use of the capabilities of a BI platform rather than something run on an individual researcher’s notebook.

In this article from Forbes it seems that IBM is also using business analytics instead of business intelligence to signal a move from simple dashboard visualizations towards predictive analytics. This can of course be related to IBM’s acquisition of SPSS, which is focused on that area of work.

However, neither Business Intelligence nor Business Analytics says anything about what kind of data is actually being displayed or analyzed. From a military intelligence perspective, this means that BI/BA tools and methods are just one of many analytical methods employed on data describing “things foreign”.

In my experience, misunderstandings can come from the other direction as well. Consider a military intelligence branch using…here it comes…BI software…to analyse incoming reports. From an outsider’s perspective it can of course seem as if what makes their activity (military) intelligence is that they use some form of BI tool and then present graphs, charts and statistical results to the end user. As a result, I have heard over and over again that we should also “conduct intelligence” in, for instance, our own logistics systems to uncover trends, patterns and correlations. That is wrong, because intelligence specialists are skilled both in analytical methods (in this case BI) and in the area or subject they are studying. However, since these tools are called Business Intelligence, the risk of confusion is high simply because of the word intelligence in there. What that person really means is that BI/BA tools seem useful for analysing logistics data as well as data on “things foreign”. A person analysing logistics should of course be a logistics expert rather than an expert in insurgency activities in failed states.

So let's say that what we currently know as the BI market evolves even further and really lays claim to being predictive. That is a logical development, since at the executive level one can argue that the investment must provide something more than just self-serve dashboards. From a military intelligence perspective this becomes problematic, since not all of those activities need to be predictive. In fact, it can be very dangerous if someone is led to believe that everything can be predicted in a contemporary, complex and dynamic conflict environment. The smart intelligence officer rather needs to understand when to use predictive BI/BA and when she or he definitely should not.

So Business Intelligence is a problematic term because:

- It is a very wide term for both a set of software products and a set of methods

- It is closely related to data warehousing technology

- It includes the term intelligence which suggests doing something more than just showing data

- Military Intelligence only covers “things foreign”.

- The move towards expecting prediction (by renaming it to Business Analytics) is logical but dangerous in a military domain.

- BI still can be a term for open source analysis of competitors in commercial companies.

I am not a native English speaker, but I do argue that we must be careful to use such a strong word as intelligence only when it is really justifiable. Of course it is probably too late for that, but it is still worth reflecting on.
