The Documentum stack is huge, so this will be an overview. This session is more slide-oriented, whereas the innovation track will feature more demos.

The Big Picture

Walks us through the stack based around different repositories

Model of the metadata on top of data. On the side of the slide you see a stack of supporting components he calls the “I/O Stack”.

- Multichannel Publishing (DocScience), two-way

- Unified Analytics Platform (Big Data with Greenplum), two-way

- Capture Services (Captiva), one-way

- E-discovery services (Kazeon), one-way

Moving away from coding toward configuration is the main goal.

Content Server focused on on-premise deployment (SOAP/REST) – single-tenant.

NGIS in the cloud where it is focused on REST – multi-tenant.

Second server architecture for the public cloud. Why? He sees different demands for public cloud.

The aim is to provide a multi-tenant solution where each added tenant costs as little as possible – preferably just another object.
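
To make the "just another object" idea concrete, here is a minimal sketch of my own, not anything shown in the session. The class and method names (`SharedRepository`, `Tenant`, `add_tenant`) are hypothetical; the point is only that onboarding a tenant creates one small object inside a shared server instead of a new installation.

```python
from dataclasses import dataclass, field


@dataclass
class Tenant:
    """Hypothetical per-tenant object: the only thing created when a tenant is added."""
    tenant_id: str
    documents: dict = field(default_factory=dict)


class SharedRepository:
    """One shared server instance holding all tenants (an assumption for illustration)."""

    def __init__(self):
        self._tenants = {}

    def add_tenant(self, tenant_id):
        # Adding a tenant allocates a single object, nothing else.
        if tenant_id in self._tenants:
            raise ValueError(f"tenant {tenant_id!r} already exists")
        self._tenants[tenant_id] = Tenant(tenant_id)

    def store(self, tenant_id, name, content):
        self._tenants[tenant_id].documents[name] = content

    def fetch(self, tenant_id, name):
        # Lookups are always scoped by tenant, so tenants never see each other's content.
        return self._tenants[tenant_id].documents[name]
```

Two tenants can store a document under the same name without colliding, because every lookup goes through the tenant object first.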

For the cloud, D2 comes first; xCP will be made available on the cloud later on.

It should be possible to design for the current stack and run the result on the public cloud stack later on.

The mechanism for the transfer is XML – formalising the application model.

He also talks about a hybrid scenario, the content supply chain, with managing the extended enterprise and all its suppliers as the use case.

D7 Performance Improvements

- Talking again about improved context switch performance

- Cache management has been improved -> Lower Memory Usage – Focused on Type Cache mainly

- Improve the number of active sessions you can handle

- Improve the response time

- Reduced the number of sessions on Oracle database

This will affect the number of Content Servers needed as well as the database deployment: the number of sessions needed to support a given number of concurrent users is drastically reduced, while response times are almost unaffected from 0 to 500 users. Again he shows impressive charts where the scalability of Documentum is much more linear than before.

REST Strategy

- Big effort of implementing REST across the board

- Pure REST approach (must support XML, JSON and Atompub)

- Do not see it as an API but rather a design paradigm

- REST as SOA Strategy

- Discovery of services using resource linking

- ns.emc.com for describing web concepts

- Connecting resources across products
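
Discovery of services through resource linking can be sketched roughly as below. This is my own illustration, not the Documentum REST API: the URLs, the `links`/`rel`/`href` field names and the in-memory `RESOURCES` table standing in for HTTP calls are all assumptions. The client knows a single entry point and reaches everything else by following link relations.

```python
# Hypothetical in-memory "server": URL -> JSON-like resource representation.
# None of these URLs or link relation names come from the talk.
RESOURCES = {
    "/services": {
        "links": [{"rel": "repositories", "href": "/repositories"}],
    },
    "/repositories": {
        "links": [{"rel": "folders", "href": "/repositories/demo/folders"}],
    },
    "/repositories/demo/folders": {
        "entries": ["Contracts", "Invoices"],
        "links": [],
    },
}


def get(url):
    """Stand-in for an HTTP GET that returns the parsed representation."""
    return RESOURCES[url]


def follow(url, rel):
    """Return the href of the link with the given relation on a resource."""
    for link in get(url)["links"]:
        if link["rel"] == rel:
            return link["href"]
    raise KeyError(f"no link with rel={rel!r} on {url}")


def discover(entry_point, rels):
    """Start at one known URL and reach a resource by following link relations only."""
    url = entry_point
    for rel in rels:
        url = follow(url, rel)
    return url
```

Calling `discover("/services", ["repositories", "folders"])` walks two links and ends at the folders resource; the client never builds a URL itself, which is what lets resources be connected across products.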

The request service layer is an abstract layer.

There is a new initiative called Linked Data Platform; EMC co-chairs the working group with IBM.

REST and xCP – REST services provide access to xCP resources

xCP clients also use platform resources such as Folders, Documents and other Documentum types.

xCP clients consume xCP and platform services.

Project Line of Sight

It is about Hyperic and the Documentum stack.

Immediate focus areas:

- Enable monitoring of Documentum product families

- Linux and Windows

- 6.7 SP2 onwards

Future:

- Captiva, DocSci, Kazeon and other products

- Provide data analytics and corrective actions enablement

Linked to the bigger initiative with xMS – a new approach to deploying Documentum.

Automated process to instantiate the complex deployments. Not only initial deployments but also upgrades and patches.

Each machine has a Hyperic agent that connects to the Hyperic Server, where the monitored data ends up and can be consumed by the Hyperic Portal, where you can:

- Monitor

- Discover

- Alert

- Correct

Immediate targets:

- Health and availability metrics

- Diagnostics and logging info

- Alerts and notifications

Later:

- Documentum platform (CS, BOCS, ACS…)

- xPlore and Webtop

- Supports 6.7 SP2 and onwards

Reflection: What will become of Reveille’s Documentum products? Is there an overlap?

xPlore and Federated Search Services 2

- xPlore 1.2 released Q4 2011

- Customized Processing (post-linguistic analysis using UIMA customization)

- Tokens are indexed based on different languages

- Since xDB is a schemaless database, the data structure can be modified to follow any model changes.

FAST has been phased out; there are 1000 deployments with xPlore now.

Federated Search Services extend the reach for search. FSS does not index – it provides access to other indices.

D2, the power of configuration

The concept of D2 is the power of runtime configuration. The idea is to be able to combine a subset of users with a subset of content and apply a configuration to that intersection. That intersection becomes the context. EMC has seen a lot of common patterns in Webtop customizations. You get a better UI if you can trim it down, and this makes it possible to have a very targeted user configuration.
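
As a rough way to picture the context idea, here is a small sketch of my own; it is not how D2 is implemented. The `Context` class, the group and document type names and the "first match wins" rule are all assumptions made for illustration. A context pairs a subset of users with a subset of content and carries the configuration that applies when both match.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical context: a user subset, a content subset and a configuration."""
    name: str
    user_groups: set   # subset of users, expressed here as group names
    doc_types: set     # subset of content, expressed here as document types
    config: dict = field(default_factory=dict)

    def matches(self, user_group, doc_type):
        # The context applies only in the intersection of both subsets.
        return user_group in self.user_groups and doc_type in self.doc_types


def resolve_config(contexts, user_group, doc_type):
    """Return the config of the first matching context (assumed rule), else an empty config."""
    for ctx in contexts:
        if ctx.matches(user_group, doc_type):
            return ctx.config
    return {}


contexts = [
    Context("legal-contracts", {"legal"}, {"contract"}, {"menu": ["review", "sign"]}),
    Context("sales-contracts", {"sales"}, {"contract"}, {"menu": ["view"]}),
]
```

With these two contexts, a legal user looking at a contract gets the review and sign actions, a sales user gets a trimmed-down view-only menu, and anything outside both intersections falls back to an empty configuration.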

xCP

Rapid Application Composition (as opposed to configuration, as in D2)

An integrated set of tools provides that. This means there will be implications for all three types of users:

- New User (D2 and Mobile for instance)

- New Application Developer (Information, Process, External Systems, Analytics Modeling and the Composition)

- New Administrator (xMS etc.)

xCP defines a semantic model of the application. From that, an optimised runtime is generated, along with domain-specific REST services.

xCP and the stateless BPM engine are mentioned as a big thing at this conference as well. More about that in the xCP session.

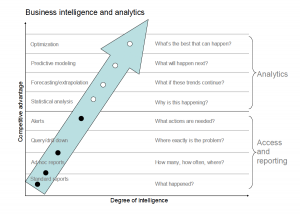

Big Data Analytics

It is possible to integrate with Greenplum in xCP 2.0 without any modifications. This works through JDBC Data Services configured in the xCP Builder, which connect to Greenplum UAP.

The integration with DocSci is done with Web Services.

The example is an insurance app where data is fed from in-car devices that monitor driving behavior. Large amounts of data are crunched into driver reports, which are output by DocSci, and into new insurance rates to be applied to the driver’s insurance.

The process definition can be configured to interrogate the Greenplum Engine.
